Improving Transformation Performance

In most cases, slow, memory-consuming translations are caused by group-based transformers.

Remember that in a feature-based transformation, a transformer operates on one feature at a time. Such a transformation only needs enough memory to hold that single feature.

A group-based transformer, however, operates on a group (collection) of features, so it needs enough memory to hold every feature in the group!

It is this grouping of data that causes performance degradation.
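To make the difference concrete, here is a conceptual Python sketch. It is not how FME works internally. Features are modeled as plain dictionaries, and a hypothetical `peak_held` counter records the largest number of features each approach must keep in memory at once. The feature-based function handles each feature and passes it straight on. The group-based one has to cache every feature before it can process any group.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List

# Hypothetical stand-in for an FME feature: a dict of attribute values.
Feature = Dict[str, object]


def feature_based(features: Iterable[Feature], stats: Dict[str, int]) -> Iterator[Feature]:
    """Handle each feature as it arrives; only the current one is held."""
    for feature in features:
        stats["peak_held"] = max(stats["peak_held"], 1)
        yield {**feature, "processed": True}


def group_based(features: Iterable[Feature], key: str,
                stats: Dict[str, int]) -> Iterator[Feature]:
    """Cache every feature by group; nothing is output until the input ends."""
    groups: Dict[object, List[Feature]] = defaultdict(list)
    held = 0
    for feature in features:
        groups[feature[key]].append(feature)
        held += 1
        stats["peak_held"] = max(stats["peak_held"], held)
    # Only now is every group known to be complete.
    for members in groups.values():
        for feature in members:
            yield {**feature, "group_size": len(members)}


# Nine addresses spread over three postcodes.
addresses = [{"id": i, "postcode": f"V{i % 3}"} for i in range(9)]
fb_stats = {"peak_held": 0}
gb_stats = {"peak_held": 0}
fb_out = list(feature_based(addresses, fb_stats))
gb_out = list(group_based(addresses, "postcode", gb_stats))
print(fb_stats["peak_held"], gb_stats["peak_held"])  # 1 9
```

With nine input features, the feature-based path never holds more than one at a time, while the group-based path holds all nine. The cached amount grows with the size of the data.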

| Jake Speedie says… |

| You’ll get better performance when you put as little data as possible into a group-based transformer. For example, put feature-based filter transformers BEFORE the group-based process, not after it (see the following exercise). Another technique is to make group-based transformers more feature-based... |
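The following is a rough Python illustration of the filter-first tip. It is a sketch with hypothetical function names, not FME behavior. The grouping step caches whatever reaches it. Running a feature-based filter first therefore shrinks what has to be cached, and it still produces the same groups.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Feature = Dict[str, object]
Groups = Dict[object, List[Feature]]


def cache_groups(features: Iterable[Feature], key: str) -> Tuple[Groups, int]:
    """Group-based step: cache everything received; report how many were cached."""
    groups: Groups = defaultdict(list)
    cached = 0
    for feature in features:
        groups[feature[key]].append(feature)
        cached += 1
    return dict(groups), cached


def filter_then_group(features: Iterable[Feature], keep: Callable[[Feature], bool],
                      key: str) -> Tuple[Groups, int]:
    # The feature-based filter runs first, so rejected features are never cached.
    return cache_groups((f for f in features if keep(f)), key)


def group_then_filter(features: Iterable[Feature], keep: Callable[[Feature], bool],
                      key: str) -> Tuple[Groups, int]:
    # Everything is cached first; unwanted features are discarded afterwards.
    groups, cached = cache_groups(features, key)
    kept = {k: [f for f in v if keep(f)] for k, v in groups.items()}
    return {k: v for k, v in kept.items() if v}, cached


def is_active(feature: Feature) -> bool:
    return bool(feature["active"])


# 100 features in four postcodes; only every fifth one is of interest.
data = [{"id": i, "postcode": f"V{i % 4}", "active": i % 5 == 0} for i in range(100)]
early_groups, early_cached = filter_then_group(data, is_active, "postcode")
late_groups, late_cached = group_then_filter(data, is_active, "postcode")
print(early_cached, late_cached, early_groups == late_groups)  # 20 100 True
```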

Making Group-based Transformers more Feature-based

Obviously, when a group-based transformer is needed, it must be used. However, many group-based transformers have a parameter that, in effect, makes them behave more like feature-based transformers.

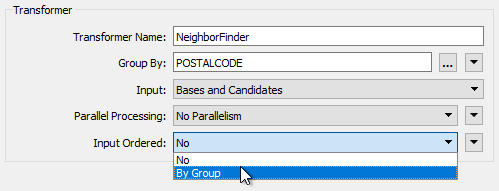

One common parameter is called "Input Ordered" and appears near the Group By parameter in most transformer dialogs:

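This parameter can only be used when the incoming features are already sorted by group, so that all features of one group arrive together. In that case, you can set the parameter to By Group, and FME can process the data more efficiently. As soon as the group value changes, FME knows the previous group is complete. It can then process that group and release it from memory, instead of caching every group until all the data has arrived.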

For example, in the screenshot above the user is grouping by postal code (i.e. each address looks for its nearest neighbor within the same postal code). If the incoming data is already sorted by postal code, the user can set the Input Ordered parameter and let FME treat this transformer more like a feature-based one.
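The idea behind Input Ordered resembles Python's `itertools.groupby`. That function assumes its input is already sorted by the key and yields each group as soon as the key changes. The sketch below is an analogy under that assumption, not FME's own code. The address records and the `peak_held` counter are hypothetical.

```python
from itertools import groupby
from operator import itemgetter
from typing import Dict, Iterable, Iterator, List, Tuple

Feature = Dict[str, object]


def process_ordered(features: Iterable[Feature], key: str,
                    stats: Dict[str, int]) -> Iterator[Tuple[object, int]]:
    """Assumes input is sorted by `key`: each group is complete, processed
    and released as soon as the key value changes."""
    for value, members in groupby(features, key=itemgetter(key)):
        group: List[Feature] = list(members)  # only this one group is held
        stats["peak_held"] = max(stats["peak_held"], len(group))
        yield value, len(group)


def process_unordered(features: Iterable[Feature], key: str,
                      stats: Dict[str, int]) -> Iterator[Tuple[object, int]]:
    """No ordering assumption: all features are cached before any output."""
    groups: Dict[object, List[Feature]] = {}
    for feature in features:
        groups.setdefault(feature[key], []).append(feature)
    stats["peak_held"] = sum(len(g) for g in groups.values())
    for value, group in groups.items():
        yield value, len(group)


# Twelve addresses, pre-sorted by postcode (four per postcode).
addresses = sorted(
    ({"id": i, "postcode": pc} for i, pc in enumerate(["A1", "B2", "C3"] * 4)),
    key=itemgetter("postcode"),
)
ordered_stats = {"peak_held": 0}
unordered_stats = {"peak_held": 0}
ordered = list(process_ordered(addresses, "postcode", ordered_stats))
unordered = list(process_unordered(addresses, "postcode", unordered_stats))
print(ordered)  # [('A1', 4), ('B2', 4), ('C3', 4)]
print(ordered_stats["peak_held"], unordered_stats["peak_held"])  # 4 12
```

In this sketch the ordered version never holds more than one postcode's worth of addresses. If the input were not actually sorted, `groupby` would emit the same postcode more than once. That is why this kind of optimization is only safe when the data really is in group order.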

| Jake Speedie says... |

|

Think back to the airport departure gate. Most airlines first call passengers who need assistance boarding, then passengers with children, then business-class passengers, and finally economy passengers (starting with passengers at the front). Boarding is easier when passengers are sorted into similar groups.

The same applies to FME. Passengers (or spatial features) are harder to handle when they arrive in random order; ordered input is more efficient. |

Besides the "Input Ordered" parameter, some transformers have their own unique parameters for improving performance.

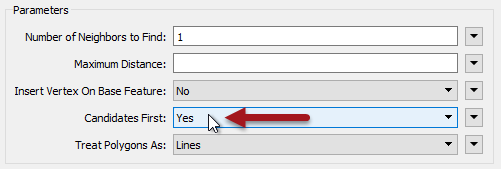

For example, the NeighborFinder transformer expects two sets of data: Bases and Candidates. By default FME caches all incoming Bases and Candidates because it needs to be sure it has ALL of the candidates before it can process any bases.

But, if FME knows the candidate features will arrive first (i.e. the first Base feature signifies the end of the Candidates) then it doesn’t need to cache Base features. It can process them immediately because it knows there are no more candidates that it could match against.

The user specifies that this is true using the parameter Candidates First:
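Here is a conceptual sketch, in Python 3.8+ with points as (x, y) tuples, of why Candidates First saves memory. The stream encoding (a port name paired with each point), the function names and the `cached_bases` counter are all hypothetical, and FME's real NeighborFinder has many more options. The default version must cache every Base until the input ends. The Candidates First version matches each Base the moment it arrives.

```python
import math
from typing import Dict, Iterable, Iterator, List, Optional, Tuple

Point = Tuple[float, float]
# Hypothetical encoding: each incoming item is (port, point); port is "Base" or "Candidate".
Item = Tuple[str, Point]
Match = Tuple[Point, Optional[Point]]


def nearest(base: Point, candidates: List[Point]) -> Optional[Point]:
    """Closest candidate by straight-line distance, or None if there are none."""
    return min(candidates, key=lambda c: math.dist(base, c), default=None)


def neighbors_default(items: Iterable[Item], stats: Dict[str, int]) -> Iterator[Match]:
    """No ordering guarantee: cache Bases and Candidates until the input ends."""
    bases: List[Point] = []
    candidates: List[Point] = []
    for port, point in items:
        if port == "Base":
            bases.append(point)
        else:
            candidates.append(point)
    stats["cached_bases"] = len(bases)
    for base in bases:
        yield base, nearest(base, candidates)


def neighbors_candidates_first(items: Iterable[Item],
                               stats: Dict[str, int]) -> Iterator[Match]:
    """Assumes every Candidate precedes the first Base, so each Base is
    matched and output immediately instead of being cached."""
    candidates: List[Point] = []
    seen_base = False
    stats["cached_bases"] = 0
    for port, point in items:
        if port == "Base":
            seen_base = True
            yield point, nearest(point, candidates)
        elif seen_base:
            raise ValueError("Candidate arrived after a Base; ordering assumption broken")
        else:
            candidates.append(point)


stream: List[Item] = [
    ("Candidate", (0.0, 0.0)), ("Candidate", (10.0, 10.0)),
    ("Base", (1.0, 1.0)), ("Base", (9.0, 8.0)),
]
default_stats: Dict[str, int] = {}
cf_stats: Dict[str, int] = {}
default_matches = list(neighbors_default(stream, default_stats))
cf_matches = list(neighbors_candidates_first(stream, cf_stats))
print(cf_matches)  # [((1.0, 1.0), (0.0, 0.0)), ((9.0, 8.0), (10.0, 10.0))]
print(default_stats["cached_bases"], cf_stats["cached_bases"])  # 2 0
```

The `ValueError` guard in this sketch makes the ordering assumption explicit. If a Candidate turned up after a Base, the earlier Bases would already have been matched against an incomplete set of candidates.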

Look at the log file for a workspace that uses a NeighborFinder. With the default settings, it ends like this:

Translation was SUCCESSFUL with 0 warning(s) (13597 feature(s) output)
FME Session Duration: 29.6 seconds. (CPU: 27.7s user, 1.5s system)
END - ProcessID: 28540, peak process memory usage: 231756 kb

With Candidates First turned on, it looks like this:

Translation was SUCCESSFUL with 0 warning(s) (13597 feature(s) output)
FME Session Duration: 28.4 seconds. (CPU: 27.4s user, 0.8s system)
END - ProcessID: 26429, peak process memory usage: 178412 kb

It’s about 4% faster than before but, more importantly, it used about 23% less memory!
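The relative changes can be worked out directly from the two log extracts. Here is a minimal Python check; the helper name `percent_reduction` is just for illustration:

```python
def percent_reduction(before: float, after: float) -> float:
    """Reduction from `before` to `after`, as a percentage of `before`."""
    return (before - after) / before * 100


# Figures copied from the two log extracts: default vs Candidates First.
duration_default, duration_cf = 29.6, 28.4   # FME Session Duration, seconds
memory_default, memory_cf = 231756, 178412   # peak process memory usage, kb

time_saved = percent_reduction(duration_default, duration_cf)
memory_saved = percent_reduction(memory_default, memory_cf)
print(f"{time_saved:.1f}% shorter session, {memory_saved:.1f}% less peak memory")
# prints: 4.1% shorter session, 23.0% less peak memory
```

Most of the time saving shows up as system time (1.5s down to 0.8s); CPU user time is almost unchanged (27.7s vs 27.4s).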

But how does a user ensure that the Candidate features arrive first? Just as with writers, you can change the order of readers in the Navigator; the reader at the top of the list is read first.

Reordering readers doesn't improve performance per se, but it does let you use performance-improving parameters such as Candidates First.

| Miss Vector says… |

|

Which of these transformers have group-related parameters for improving performance? Pick all that apply, and see if you can answer without looking at the transformers:

1. StatisticsCalculator
2. SpikeRemover
3. PointCloudCombiner
4. FeatureMerger |