Authoring Job Chains

Workflow Management is a technique for controlling workspaces in sequence or branching with in-built logic. Part of this technique is being able to author workspaces that are "chained together" to run one after another.

What are Job Chains?

A chain of jobs is one that is run in sequence one after the other. There are various ways to implement this.

The FeatureWriter Transformer

The easiest way to chain workspaces... is not to! A chain is often necessary because one workspace writes data that the next must then process. However, the FeatureWriter transformer allows data to be written and then further transformation to take place within the same workspace!

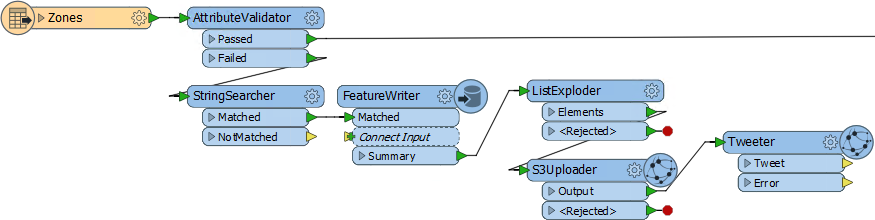

In the above workspace, data is validated and validation errors are written out to a series of datasets according to the error type. These datasets are uploaded to Amazon S3 and a tweet sent to alert someone to the problems.

Without the FeatureWriter, such a project might have taken two or maybe three workspaces chained together. Here it only requires one.

A Simple Chain

It's fairly simple to get one workspace to run another; all that is needed is a transformer or shutdown script to send the command. Given that each workspace in FME Server can be run using a URL, and that there is a REST API to do similarly, it's quite simple to run an HTTP command using an HTTPCaller transformer or a shutdown script.

Alternatively, an FMEServerJobSubmitter transformer can be used. This transformer triggers a workspace to run on FME Server. It has various parameters that allow the author to define which workspace on which FME Server is to be run.

By adding this transformer to multiple workspaces a chain of almost any length can be created.

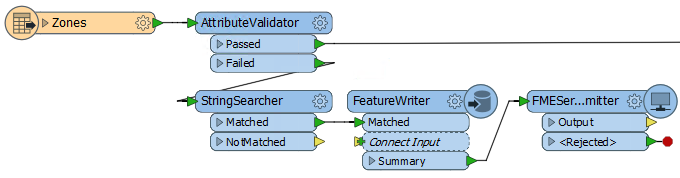

In this workspace the data is sent to a FeatureWriter transformer, and the results of that sent as a single summary feature that triggers the FMEServerJobSubmitter.

However, don't think a FeatureWriter is a necessity for a chain of data. It's equally valid for a step in the chain to not write data, but just carry out an action and trigger the next step.

| Sister Intuitive says… |

|

The first workspace in a chain may start with a Reader that reads a source dataset, where each source feature triggers the FMEServerJobSubmitter. For example, the source data may be a list of files that are to be translated.

But more often the next job needs to be triggered just once. This requires only a single feature and to produce this a Creator transformer can be used instead of a Reader, or a Sampler transformer used to restrict the flow of features to a single one. |

A Parent-Child Approach

Instead of a chain of workspaces where one calls the next, a different approach is to have a control (parent) workspace that runs a series of (child) workspaces in turn.

Like in a simple chain, a master workspace runs other workspaces by using a transformer such as the FMEServerJobSubmitter.

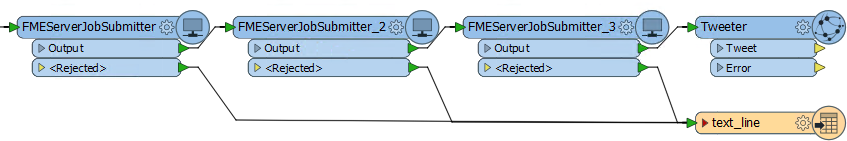

Here a control workspace is using the FMEServerJobSubmitter to run three further FME workspaces. Maybe each workspace is a separate step in a database update process:

If a particular task fails then the output is routed to a text file Writer – meaning this could be used in a notification system to send an email to an administrator alerting them to the failure. Successfully executing all three results in a tweet being sent.

Instead of "Parent-Child", this setup is sometimes also known as the "Master-Slave" approach.

Conditional Processing

In some scenarios there might be several workspaces, only one of which should be run. To do so the logic for deciding which workspace is executed can be made using a Tester, or other filter transformers.

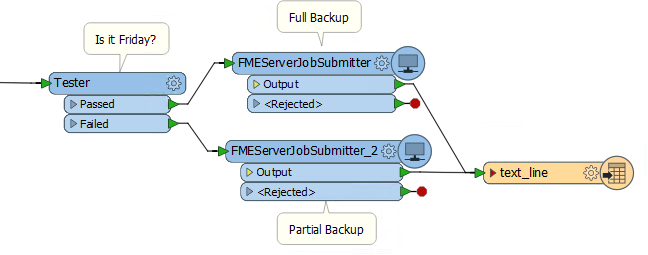

For example, here an organization runs a daily process to upload field updates into a database. Once a week it does the same, but also exports all files to Dropbox (for example):

The test is carried out by a Tester, and the daily/weekly processes are each defined in a separate workspace.

FMEServerJobSubmitter and Portability

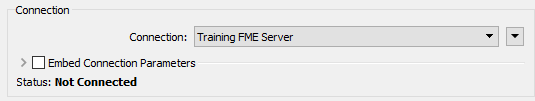

The FMEServerJobSubmitter transformer allows the selection of an FME Server connection - in the same way as the Workspace Publishing wizard:

However, many FME Server projects include not one, but two servers: One for development and testing and the other the live system.

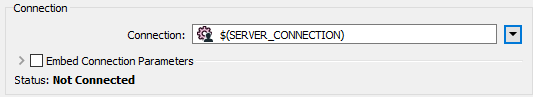

If you develop a solution using an FMEServerJobSubmitter that connects to MyFMEServerDev (for example) then, to save having to manually change each transformer MyFMEServerLive, you can make the connection into a user parameter:

| Police Chief Webb-Mapp says... |

|

Another method would be to use the FME Server parameters FME_SERVER_HOST and FME_SERVER_PORT.

You would need to embed the connection parameters (rather than using a predefined connection) and set the FME Server URL to $(FME_SERVER_HOST):$(FME_SERVER_PORT) That way the workspace would function depending on which Server it was published to, instead of having to set a published parameter. However, it does mean you couldn't test it on FME Desktop and each child (slave) workspace would need to be on the same FME Server as the parent (master). |

| WARNING |

|

Interestingly the initial/control/parent workspace can be run on either FME Desktop (e.g. Workbench) or FME Server. The FMEServerJobSubmitter works on both platforms. So you can trigger the entire process to run on Server using a control that is executed on Desktop.

However, there's a difference. On FME Desktop the control workspace runs immediately, but each child job executed by an FMEServerJobSubmitter transformer is submitted to the FME Server queue and may have to wait for an engine. On FME Server - if you have Wait for Job to Complete = Yes - it's the reverse: the control workspace is submitted to the queue, but each child job executed by an FMEServerJobSubmitter bypasses the queue and runs immediately. This means that on Desktop the child processes are affected by the FMEServerJobSubmitter Job Priority and Job Tag parameters. But on Server (when Wait for Job = Yes) those parameters are ignored because the child processes are run immediately and not queued. In short, those FMEServerJobSubmitter parameters only apply when the call comes from FME Desktop, because only then are the jobs queued. |